Enviado por

common.user1524

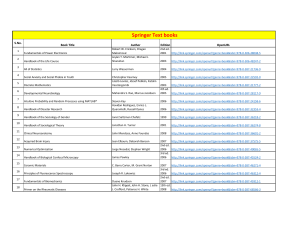

Kent A. Peacock - The Quantum Revolution